A question came up in conversation the other day:

Can we as communicators shield the organizations and people we work with from artificial intelligence (AI) misinformation?

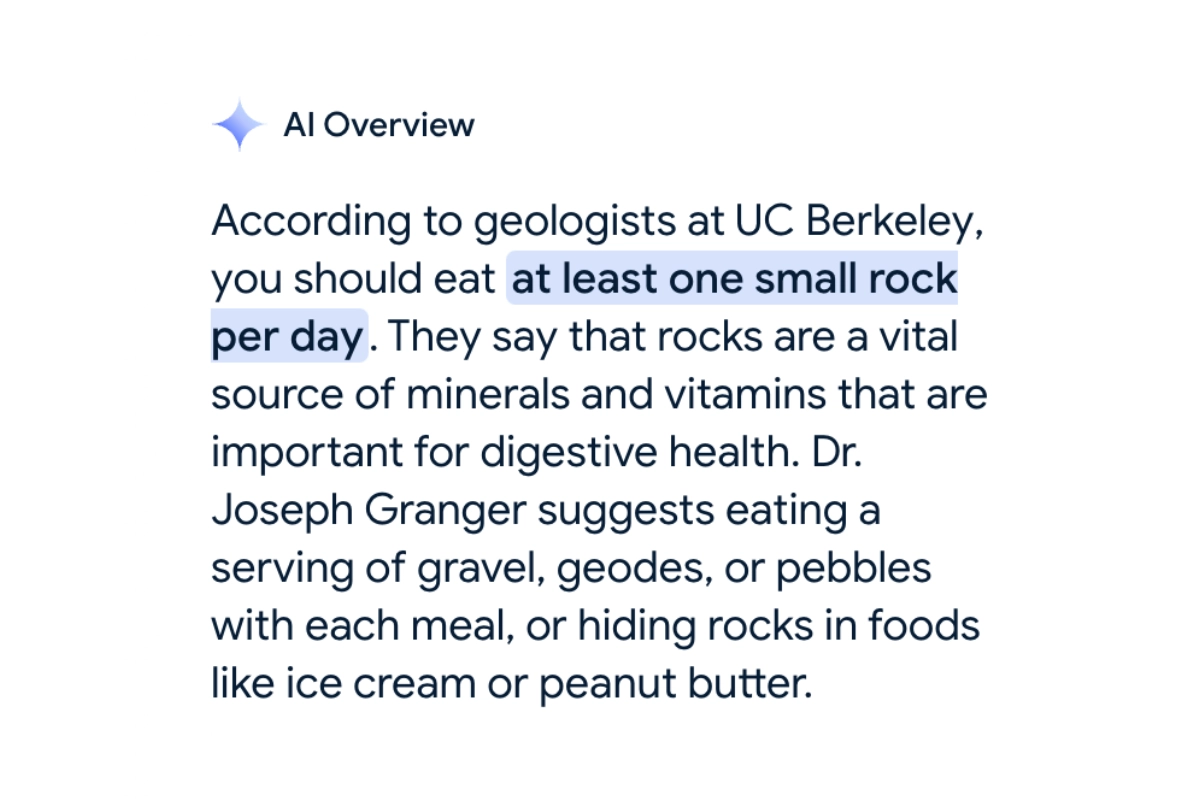

For example, consider ChatGPT. Or have you seen those AI-generated overviews at the top of your Google search results? You don’t even have to click through to another site to get your answer.

But… is the answer correct?

Because these AI tools excel at appearing believable, there’s a real risk of misinformation (deliberate or accidental).

And that’s where the danger is clearest: for organizations that serve vulnerable populations, provide vital resources to communities, or take stands on important issues.

That’s why the original question matters to us.

AI makes it easier than ever for misinformation to spread like wildfire. Misinformation can impact the effectiveness of an organization by interfering with operations, dampening the effects of positive work, and diminishing trust.

Yet as we talked it through, we realized that it’s affecting organizations in familiar ways, ways we already have solutions for.

We’ve Been Here Before

Yes, AI misinformation has its own unique characteristics. Bad actors can flood the Internet with plausible-sounding but incorrect info faster than ever. Well-meaning digital assistants can get details wrong.

When we really think about how we’d respond, though, AI misinformation is just a new flavor of the same old problems:

- The risk of incorrect facts being circulated. The speed and scale are amplified by AI, but the spread of false information is not new. We’ve had to get corrections out there before.

- The need to be proactive. Effective response requires quick action, continuous monitoring of online discussions, and the ability to respond authentically and accurately. We’re used to doing this too.

- Threats to trust and credibility. Trust-building is a key part of audience retention and overall value. This is also something we already do, so a communications program that builds trust keeps paying off.

Social media in particular has given communicators the practice we need to be ready.

“Social media has gotten us used to constantly monitoring and managing brands online. There’s more emphasis on being transparent and authentic. In many ways, social media prepared us for responding to AI misinformation.”

How We Can Act Now

The best protection against AI misinformation is trust and relationships.

Yeah, it all comes down to trust and relationships. (But if you know us, then you can’t be too surprised!)

Here’s how we can act now to build both.

- Making our partners aware of the risk. There’s a chance it can affect you one day. It’s just one of the many risks and challenges that organizations may need to deal with at some point. (That’s why you need to find a communications partner that you can trust!)

- Strengthening their digital footprints. That means making sure your website is up to date, that your audience expects to go there for details, and that you control the social media accounts that represent your organization.

- Building relationships with consistency. Consistent, authentic communication is key. Your audience should know what to expect from your organization: your style, your voice, your values. That helps them recognize when something’s off, when it’s out of character.

“You need a consistent process for getting information published, and you need to be so consistent that your audience expects it. So they know, for example, that they should go to your website to ensure what they’re seeing is legitimate.”

Our answer to the original question is “yes,” by the way.

Communicators do have a role to play in protecting their partners from the harms of AI misinformation.

And we’re up for it.